Guide

Evaluating early intervention programmes: Six common pitfalls, and how to avoid them

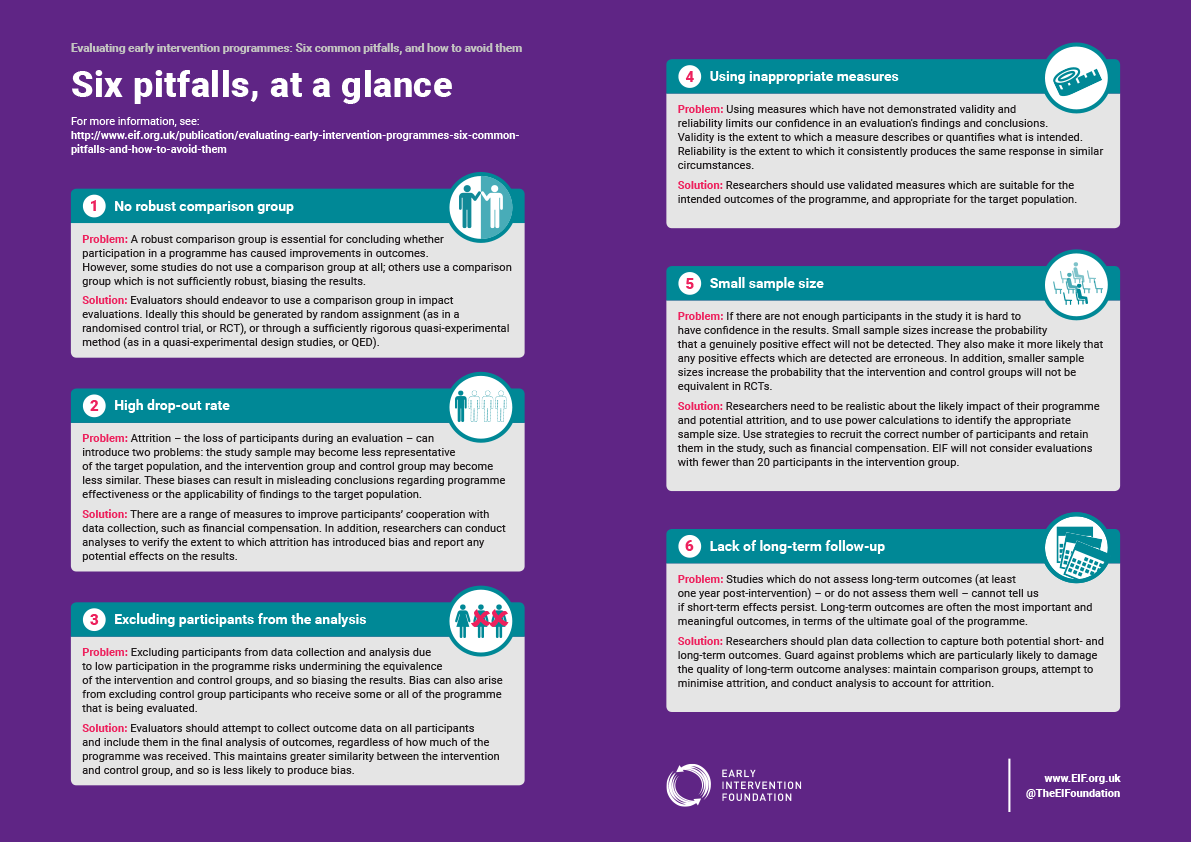

This guide addresses six of the most common issues we see in our assessments of programme evaluations. For each issue, it explains how the problem undermines confidence in a study’s findings and how it can be avoided or rectified, and provides case studies and a list of useful resources.

Whether you are involved in commissioning, planning or delivering evaluations of early intervention programmes, these are the issues to understand and watch out for.

Why does avoiding these common pitfalls in evaluation matter?

High-quality evidence on ‘what works’ plays an essential part in improving the design and delivery of public services, and ultimately the outcomes for the people who use those services. Early intervention is no different: programmes should be commissioned, managed and delivered to produce the best possible results for children and young people at risk of developing long-term problems.

EIF has conducted over 100 in-depth assessments of the evidence for the effectiveness of programmes designed to improve outcomes for children. These assessments consider not only what the evidence finds (whether it suggests that a programme is effective or not) but also the quality of that evidence. Studies of programme impact vary in how robust they are and in how well they have been planned and carried out. Studies that are less robust or less well conducted are more prone to producing biased results, which may overstate a programme’s effectiveness. In the worst case, they may mislead us into concluding that a programme is effective when it is not effective at all. Therefore, to understand what the evidence tells us about a programme’s effectiveness, it is essential to also consider the quality of the process by which that evidence was generated.

How does this guide help?

In this guide, we identify a set of issues with evaluation design and execution that undermine our confidence in a study’s results, and that we have seen repeatedly across the more than 100 programme assessments we have completed to date. To help address these six common pitfalls, we provide advice for those involved in planning and delivering evaluations, evaluators and programme providers alike. We hope this advice will support improvements in the quality of evaluation in the UK, and in turn generate more high-quality evidence on the effectiveness of early intervention programmes in this country.