
No time like the present: using EIF’s practical evaluation guide to understand the impact of local support for reducing parental conflict

With the launch of our reducing parental conflict (RPC) practical evaluation guide at the end of March, and the announcement in April of a further £4m of funding from the Department for Work and Pensions to train frontline staff to deliver specialist parental conflict interventions and related support to parents, Max Stanford and Helen Burridge explain why now is an opportune time for local areas to take active steps to evaluate their work in this important and growing area of early intervention.

Understanding the effectiveness of ongoing support to reduce the impact of conflict between parents on children will be critical as local areas continue to face increasing pressures on services and budgets, exacerbated by the Covid-19 pandemic and its consequences.

From our work with local areas, we know that those commissioning and delivering RPC support frequently struggle to answer two questions: how this support is being provided, and what impact it is having. Many are unsure how to begin thinking about evaluation, and feel that its methods and language fall outside their experience and expertise.

To try to plug this gap, in late March we published our first practical evaluation guide for local areas, aimed at supporting them on their RPC evaluation journey. The guide is designed to be both accessible and comprehensive, breaking down the process of evaluation into four key modules.

Each module is divided further into a series of tasks, each with easy-to-implement advice, tips and tools.

The process starts with important decisions about how the evaluation will be resourced and managed, such as assigning an evaluation lead, a senior responsible owner and/or a steering group.

Module 1: Deciding what to evaluate

In our experience, areas often have not fully mapped out the complete set of local RPC interventions and their intended outcomes. Identifying all the relevant interventions – that is, all those activities with a beginning, middle and end, a set sequence and eligibility requirements – is essential. This includes interventions in which practitioners work directly with families, as well as those that work indirectly, such as training for practitioners.

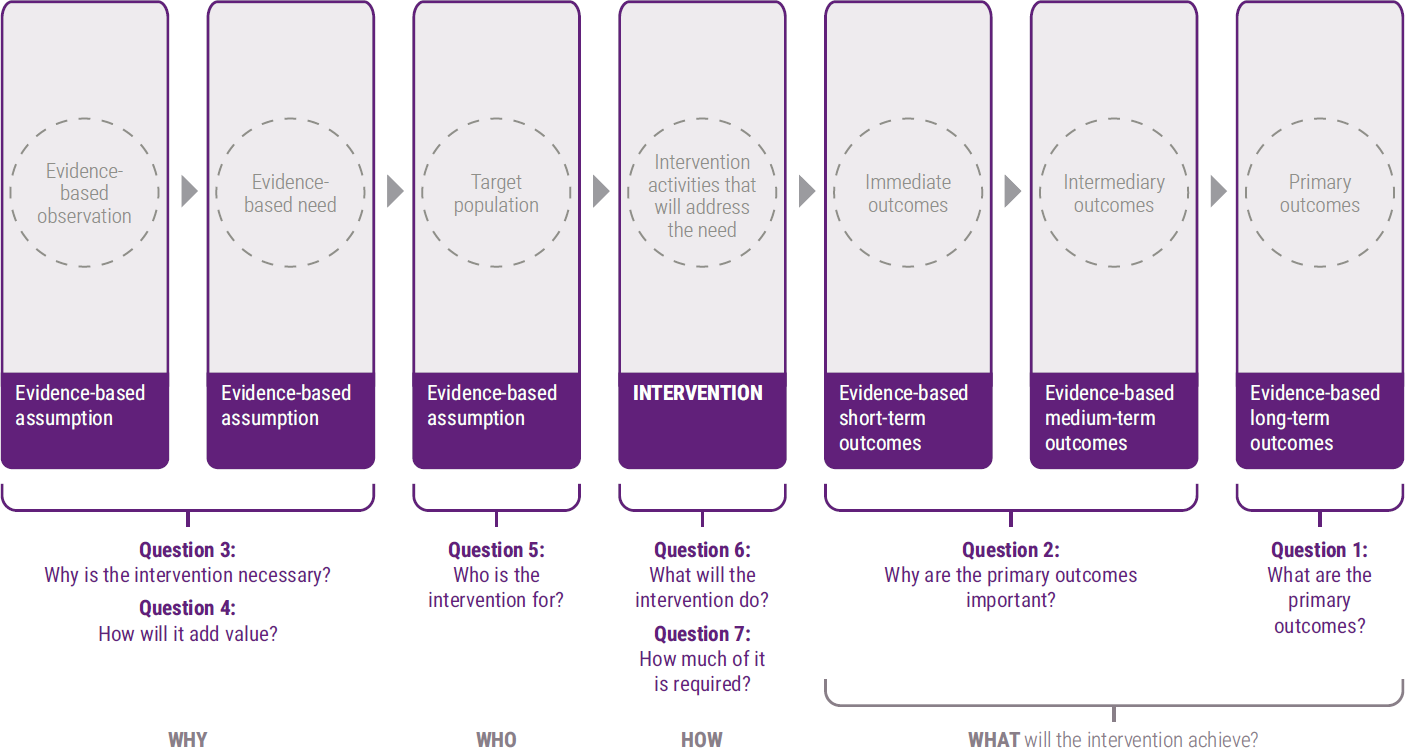

To help with this, we have provided templates on intervention mapping, to sketch out the local system, and on theory of change, to identify each intervention's intended outcomes. Local areas have also found these resources valuable for strategic decision-making and for building local partnerships.

Download our theory of change template as a fillable PDF.

The guide provides 12 editable, easy-to-use templates, ranging from logic models to survey templates, topic guides and an evaluation report template, with detailed worked examples to help readers complete each one.

Module 2: Planning how to evaluate

We know that some evaluation terms can be unfamiliar to those working in local areas, which is why the guide provides definitions and descriptions of key concepts.

This includes the important differences between an impact evaluation and a process evaluation:

- An impact evaluation looks at whether intended changes have occurred. The guide recommends first conducting a pilot for outcomes, which means using validated measures with participants both before and after the intervention to investigate whether it has the potential to improve its intended outcomes. After this, we recommend conducting a robust evaluation to understand whether the intervention has caused any changes.

- A process evaluation looks at whether an intervention is being implemented as intended, to understand how an intervention is working and why.

For interventions working directly with families affected by parental conflict, the guide recommends conducting a pilot for outcomes to explore the potential for impact, alongside a process evaluation to explore aspects of implementation. For interventions that are not working directly with those affected by parental conflict, such as practitioner training, we recommend a process evaluation only, as understanding the impact of this kind of activity on child outcomes will require more advanced evaluation methods.

The guide also provides practical advice on a range of issues, such as data protection and project management, which are critical to running a local evaluation but are easily overlooked.

Module 3: Undertaking an evaluation

In developing the guide, we worked closely with six local areas. Their experiences formed the basis of practice examples throughout the guide, which follow three distinct narratives to illustrate how areas can approach evaluation at different stages of the RPC journey. The three fictionalised locations – Newborough, Oldtown and Seaport – highlight experiences, tips and lessons from areas that are, respectively, ‘just starting out’, ‘making good progress’, and ‘becoming established’ in their RPC plans and activities.

Alongside these examples and explainers on key concepts, the guide includes downloadable templates that provide the tools needed to collect comprehensive and accurate evaluation data through a range of methods, including surveys, interviews and focus groups, depending on the scope and type of evaluation.

Module 4: Evaluation reporting

Evaluation is not only about collecting data, but also about reporting useful findings and making sure they are used. The guide provides advice on how to interpret and disseminate evaluation findings, considering who your audience is, how they will use the findings and when – for example, at which key planning or decision-making moments in the year. Practical tips include how to identify what senior decision-makers will need from your evaluation report, as opposed to practitioners, other partners across the local area, or families and other beneficiaries.

Why begin evaluation now?

Recent research published by the Department for Work and Pensions shows that nearly 9 in 10 councils believe RPC practitioner training is important to embedding support in their services, and feel positive about the national RPC programme’s potential to improve outcomes for children in their area. With nearly £4 million in additional funding for training now available for councils to bid for, it is critical that areas are able to demonstrate effectively the difference that training can make and to understand whether their local programmes are improving outcomes for children. A focus on evaluation is the only way this can be achieved.

We believe our practical guide provides what local areas need to evaluate their local RPC offer in a way that is straightforward and comprehensive. We designed the guide with substantial input from the local areas we have been working with. But we want the evaluation guide – like the RPC commissioner guide that came before it – to develop and evolve based on what local areas need. Have you used the guide, how have you used it, and is there anything we have missed? Which aspects should we focus on developing further? If you have any feedback or questions, please do get in touch.

To build on the guide, we will be publishing case studies detailing how a number of local areas have begun to evaluate their RPC interventions, which we hope will provide more insight into planning and conducting evaluations. We will also be producing a wider range of resources and continuing our work to support local areas. To identify local authorities’ support needs, over the next month we will be launching an online survey and running focus groups with local users of EIF RPC tools and resources.